Controllable generation is a promising approach to the challenge of annotating 3D data, and its precision becomes especially important when it is used to produce data for autonomous driving. Existing methods integrate diverse generative information into the control inputs, using frameworks such as GLIGEN or ControlNet, and achieve commendable results in controllable generation. However, such approaches intrinsically limit generation performance to the learning capacity of predefined network architectures. In this paper, we explore how controlling information is integrated and introduce PerLDiff (**Per**spective-**L**ayout **Diff**usion Models), a method for effective street view image generation that fully leverages perspective 3D geometric information. PerLDiff uses 3D geometric priors to guide the generation of street view images with precise object-level control during network learning, which yields more robust and controllable output. It also achieves better controllability than alternative layout control methods. Empirical results show that PerLDiff markedly improves generation precision on the NuScenes and KITTI datasets.
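The abstract does not spell out how 3D geometry enters the network. As a rough illustration only, the sketch below shows one standard ingredient of a perspective layout: projecting a 3D bounding box into a camera image with a pinhole intrinsic matrix `K` and rasterizing it into a binary mask. The box parameterization (center, size, and yaw in camera coordinates with x right, y down, z forward), the function names, and the use of an axis-aligned 2D rectangle instead of a filled polygon are all assumptions made for this example, not PerLDiff's actual implementation.

```python
import numpy as np


def box_corners_cam(center, size, yaw):
    """Return the 8 corners (8x3) of a 3D box in camera coordinates.

    Hypothetical convention: x right, y down, z forward; size = (w, h, l);
    yaw rotates the box about the camera y axis.
    """
    w, h, l = size
    # Corner offsets in the box's local frame (length along x, width along z).
    xs = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * l / 2
    ys = np.array([1, 1, 1, 1, -1, -1, -1, -1]) * h / 2
    zs = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * w / 2
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])
    local = np.stack([xs, ys, zs])  # shape (3, 8)
    return (rot @ local).T + np.asarray(center, dtype=float)


def perspective_box_mask(center, size, yaw, K, height, width):
    """Rasterize the 2D bounding rectangle of a projected 3D box (simplification)."""
    corners = box_corners_cam(center, size, yaw)
    mask = np.zeros((height, width), dtype=bool)
    # Keep only corners in front of the camera (a crude stand-in for proper clipping).
    in_front = corners[:, 2] > 1e-3
    if not in_front.any():
        return mask
    uvw = (K @ corners[in_front].T).T      # homogeneous pixel coordinates
    uv = uvw[:, :2] / uvw[:, 2:3]          # perspective divide
    u0, v0 = np.floor(uv.min(axis=0)).astype(int)
    u1, v1 = np.ceil(uv.max(axis=0)).astype(int)
    # Clip the rectangle to the image bounds before filling it.
    u0, u1 = max(u0, 0), min(u1, width)
    v0, v1 = max(v0, 0), min(v1, height)
    if u0 < u1 and v0 < v1:
        mask[v0:v1, u0:u1] = True
    return mask
```

For example, a 2 m cube centered 10 m in front of a camera with focal length 100 px and principal point (50, 50) covers a 24 x 24 pixel block around the image center. A filled convex hull of the projected corners would give a tighter mask, at the cost of a polygon-rasterization step.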

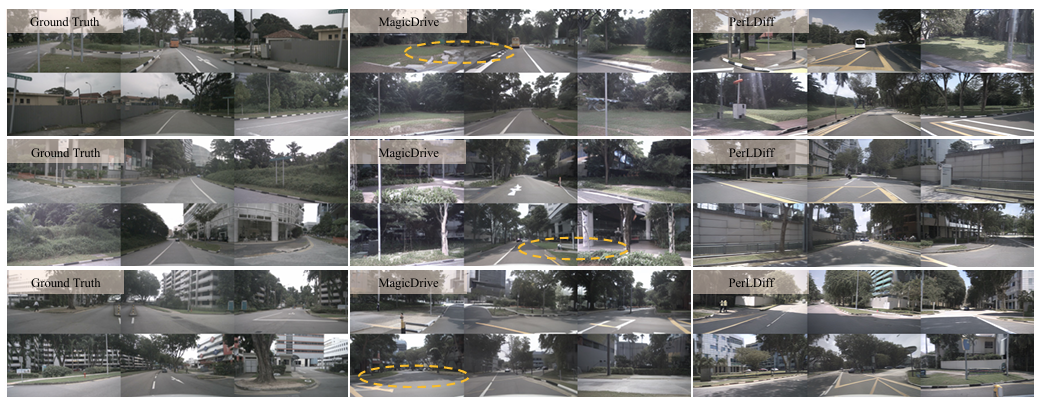

PerLDiff generates images that are consistent with the ground-truth road information.

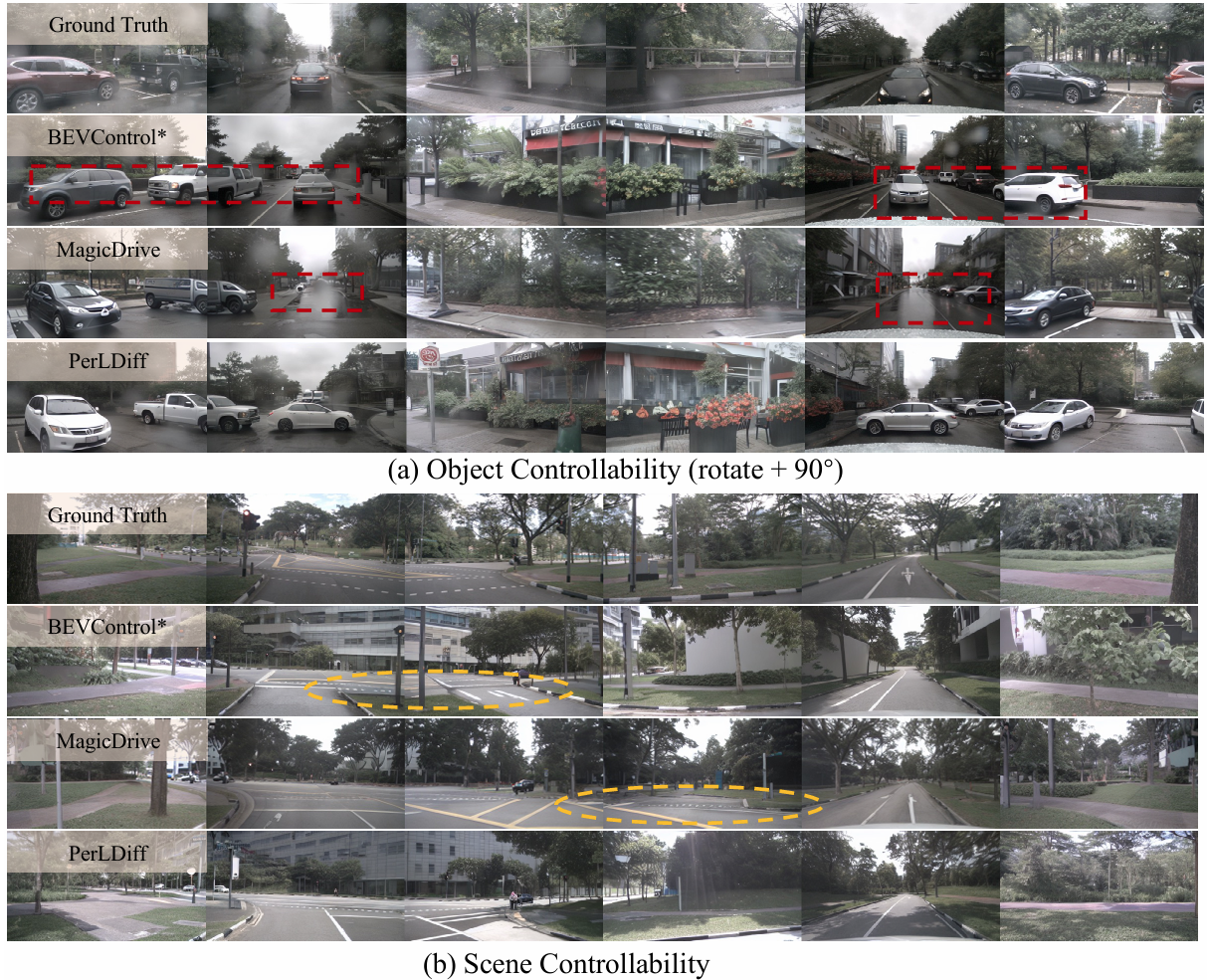

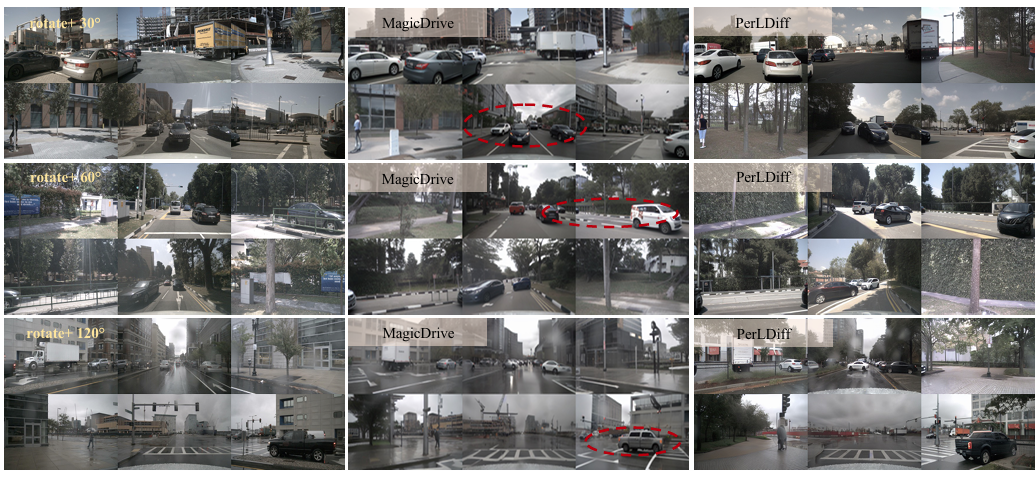

PerLDiff can generate objects at arbitrary orientation angles.

Qualitative visualizations of synthesized day, night, and rain scenes.

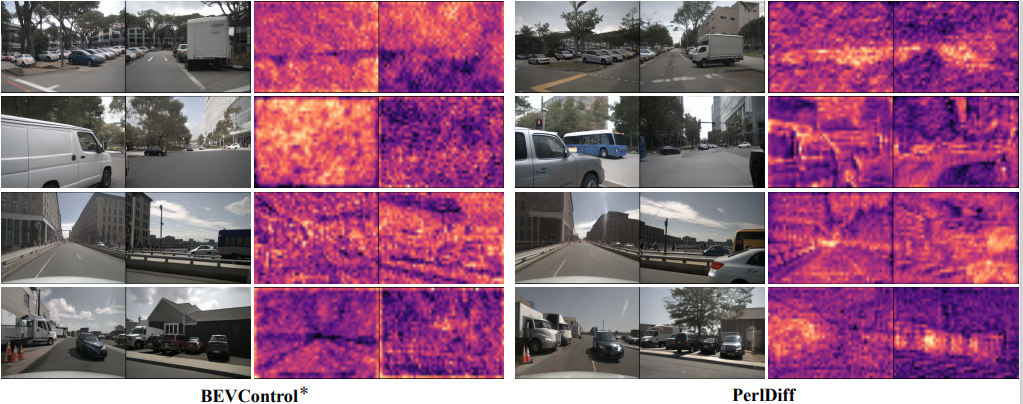

BEVControl* produces disorganized and diffuse attention maps, whereas PerLDiff refines the responses within the attention maps, yielding more accurate object-level control.
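The caption above contrasts attention maps but does not describe the mechanism. A minimal sketch of one way a perspective mask can steer cross-attention is shown below, assuming per-object binary masks such as those obtained by projecting 3D boxes into the image: attention logits between a pixel and an object whose projected region does not cover that pixel are pushed down by a large penalty before the softmax, so each pixel attends mainly to the objects that cover it. The function name, the additive-penalty scheme, and the `penalty` value are hypothetical; PerLDiff's actual attention modulation may differ.

```python
import numpy as np


def masked_cross_attention(q, k, v, masks, penalty=1e4):
    """Cross-attention from image pixels to object tokens, biased by layout masks.

    Hypothetical sketch.
    q: (P, d) pixel queries; k: (M, d) object keys; v: (M, d_v) object values;
    masks: (M, P) array with 1 where object m's projected region covers pixel p.
    Returns the attended values (P, d_v) and the attention weights (P, M).
    """
    d = q.shape[-1]
    logits = q @ k.T / np.sqrt(d)                  # scaled dot-product scores, (P, M)
    logits = logits - penalty * (1.0 - masks.T)    # suppress objects outside each pixel's mask
    logits = logits - logits.max(axis=-1, keepdims=True)  # numerically stable softmax
    weights = np.exp(logits)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights
```

With two pixels and two objects, where object 0 covers only pixel 0 and object 1 covers only pixel 1, each pixel's output equals the value vector of the object that covers it. A finite penalty is used instead of negative infinity so that pixels covered by no object still receive a valid (uniform) distribution rather than NaNs.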

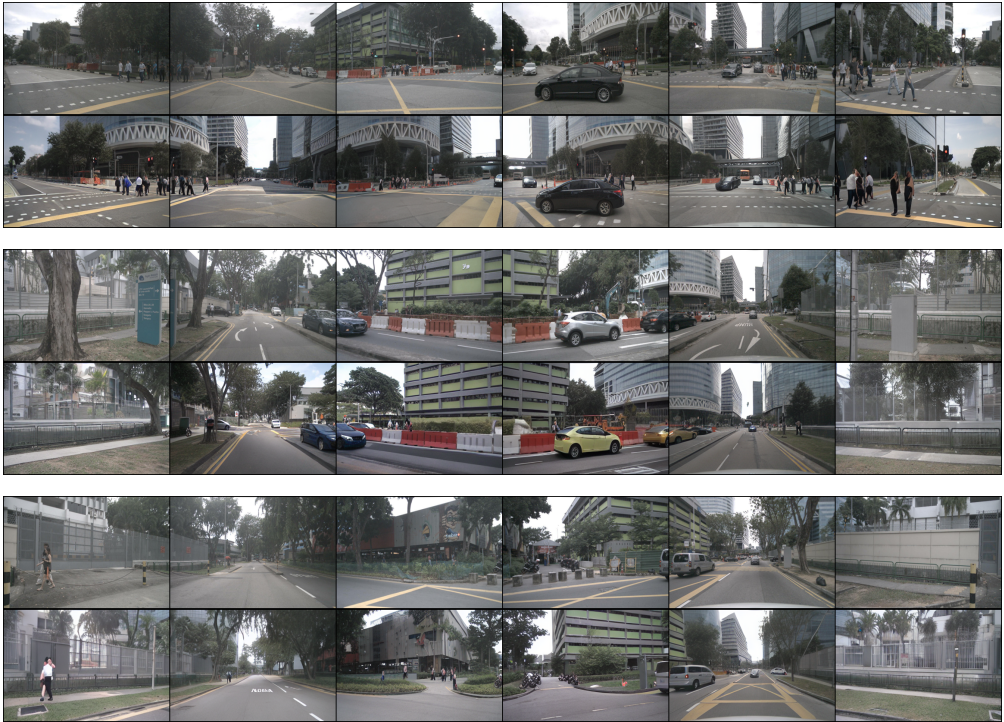

Street view images generated on the NuScenes validation set (ground truth shown in the first row).

Street view images generated on the KITTI validation set (ground truth shown on the left).

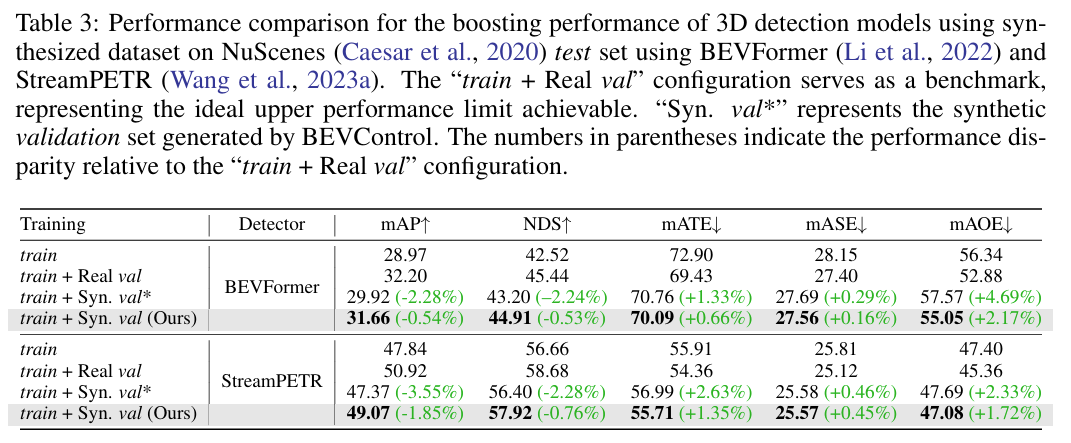

Images generated by PerLDiff can be used for data augmentation when training 3D object detectors such as BEVFormer and StreamPETR.

@article{zhang2024perldiff,
  title={PerlDiff: Controllable Street View Synthesis Using Perspective-Layout Diffusion Models},
  author={Zhang, Jinhua and Sheng, Hualian and Cai, Sijia and Deng, Bing and Liang, Qiao and Li, Wen and Fu, Ying and Ye, Jieping and Gu, Shuhang},
  journal={arXiv preprint arXiv:2407.06109},
  year={2024}
}